Hexagonal Architecture allows us to continuously evolve a web back-end. This post continues a series: previous instalments demonstrated how Hexagonal Architecture facilitates automated test architecture and the development of a Vue.js based front-end. Here, we share how we applied it in the back-end for the Online Agile Fluency Diagnostic application.

- Hexagonal Architecture

- Our domain

- Working domain driven: Commands and Queries

- Creating a diagnostic session

- Inside the command

- Creation domain logic

- Repositories

- Putting it all together, guided by tests

- Conclusion

- References

Hexagonal Architecture

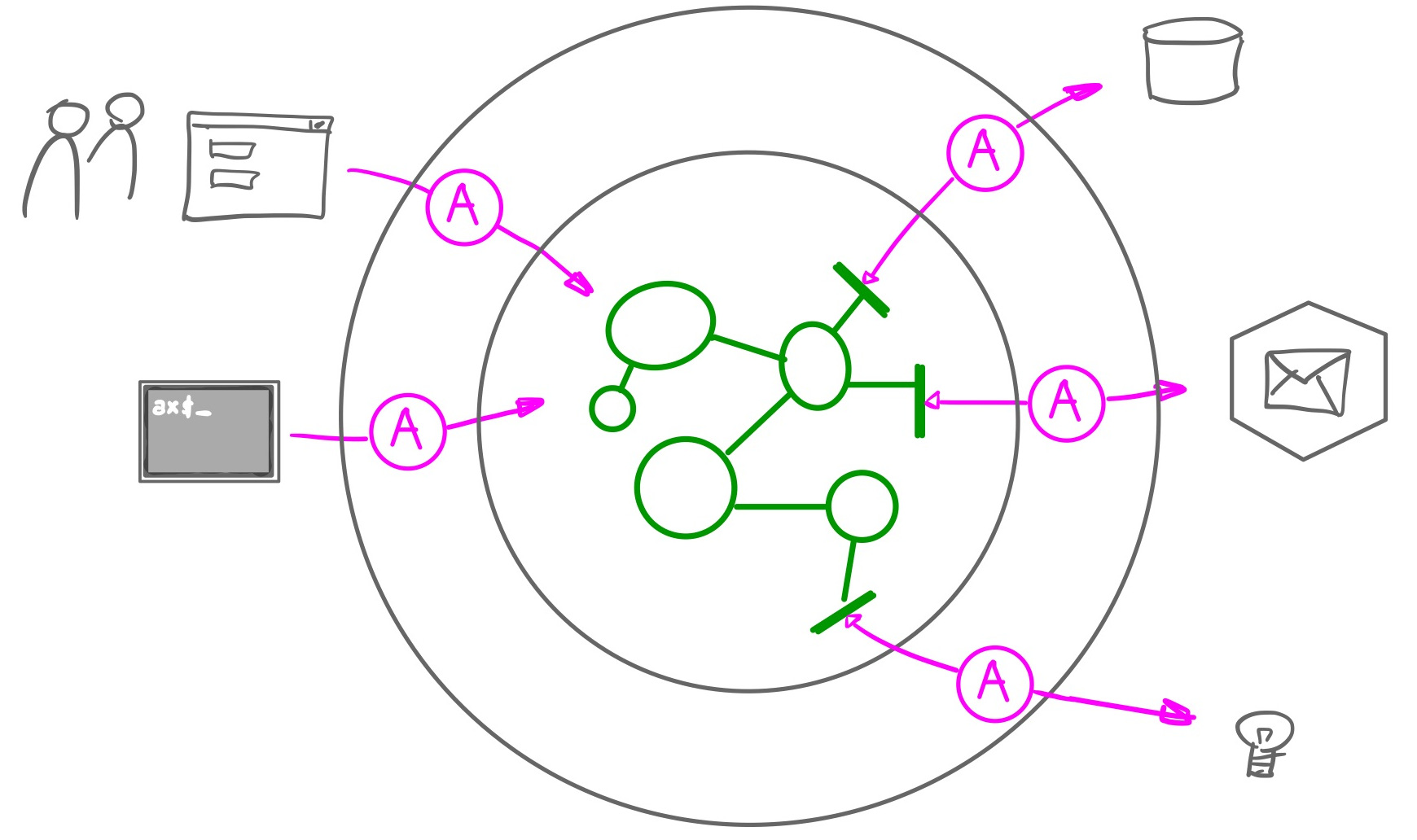

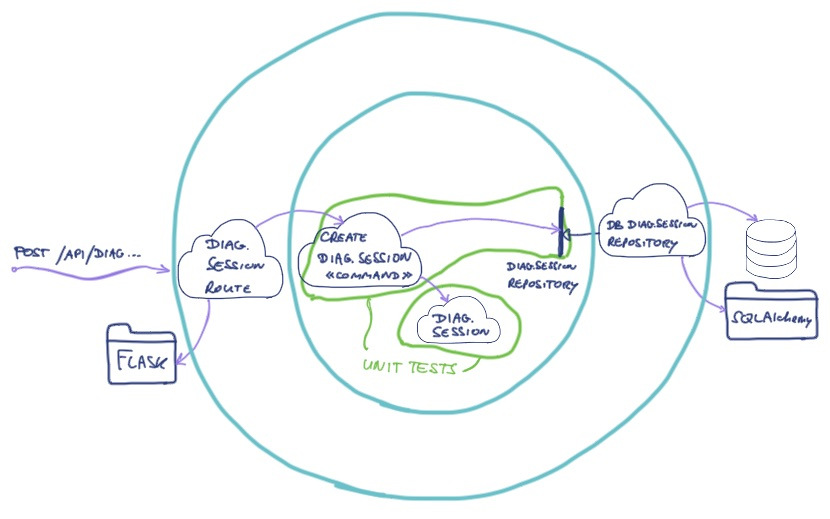

Applying the Hexagonal Architecture pattern enables us to focus on the language of our domain in the components we develop. The pattern describes that domain logic resides in the centre, mapping to and from the outside world is managed through ports and adapters, and dependencies go outside-in.
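As a minimal sketch of what "ports and adapters" can look like in Python: the domain defines a port in its own terms, and an adapter implements it. All names here (Notifier, InviteParticipant, RecordingNotifier) are hypothetical and chosen for illustration only; they are not taken from the application.

```python
from abc import ABC, abstractmethod


class Notifier(ABC):
    """Port: an interface defined by the domain, in domain terms (hypothetical)."""

    @abstractmethod
    def notify(self, recipient, text):
        ...


class InviteParticipant:
    """Domain logic: depends only on the port, never on a concrete adapter."""

    def __init__(self, notifier):
        self.notifier = notifier

    def __call__(self, recipient, team):
        self.notifier.notify(recipient, f"You are invited to the session of {team}")


class RecordingNotifier(Notifier):
    """Secondary adapter for tests: records messages instead of sending them."""

    def __init__(self):
        self.sent = []

    def notify(self, recipient, text):
        self.sent.append((recipient, text))


notifier = RecordingNotifier()
InviteParticipant(notifier)("ann@example.com", "Team Rocket")
```

The dependency points inwards: the adapter knows the port, while the domain object knows nothing about how messages are actually delivered.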

Our domain

We are working on a web application for the Agile Fluency project, to support licensed Agile Fluency facilitators to run remote diagnostic workshops with development teams. Team members fill in a survey using the application and the application creates an aggregated visualisation of the survey results in a ‘rollup chart’.

We prefer to work domain driven. We started out in spring 2020 with a quick event storming session and identified domain concepts like Facilitator, Diagnostic Session (the workshop), Survey, and Rollup Chart. We made sure that our front-end and back-end components speak this domain language, by having the components, classes and functions reflect these concepts.

So how does Hexagonal Architecture manifest itself in our back-end component? What other design decisions did we make to structure our code? Let’s use creation of a new diagnostic session as an example.

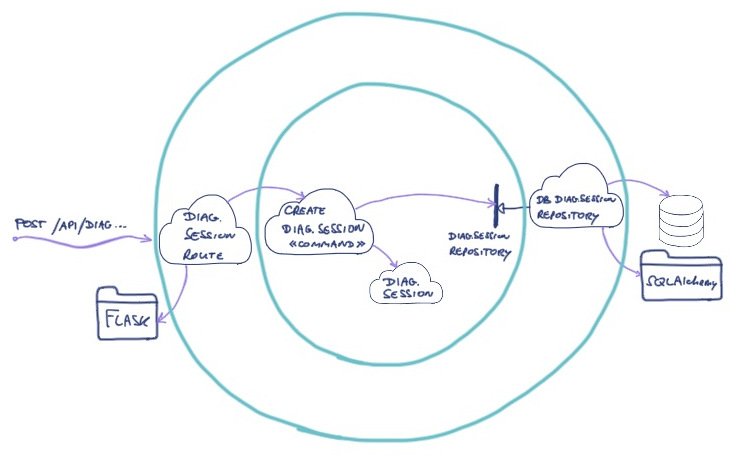

The back-end component is Python based. It provides an HTTP REST API, stores its data in a SQLite database, and sends emails through SMTP. Relevant domain concepts are DiagnosticSession and the CreateDiagnosticSession command. The persistence concern is represented by a DiagnosticSessionRepository port, an interface within our domain.

The REST API is implemented by routing code based on the Flask library. This is a primary adapter driving the back-end component. We have created a DiagnosticSessionRoutes class that translates and delegates to domain objects.

The DiagnosticSessionRepository port is implemented by the DBDiagnosticSessionsRepository adapter, which uses the SQLAlchemy library and SQLite to store and retrieve data. It takes care of any side effects from decisions taken by the domain logic.

The diagram below illustrates these different concepts.

An overview of the different adapters in our back-end component:

| Adapter | Primary/secondary | Module | Responsibility |
| --- | --- | --- | --- |
| DiagnosticSessionRoutes | primary | /app/adapters/routes | offer HTTP REST API; translate incoming requests to calls on domain logic |
| DBDiagnosticSessionsRepository | secondary | /app/adapters/repositories | map DiagnosticSession to/from an SQLite database; implement the DiagnosticSessionRepository interface |
| SMTPBasedMessageEngine | secondary | from quiltz.messaging library | use SMTP to send messages; implement the MessageEngine interface, which is part of our domain |

Working domain driven: Commands and Queries

We have structured our domain code in behaviour-rich domain objects and command & query objects. When an HTTP REST request comes in, the routing code transforms incoming JSON data into data objects and delegates to an appropriate Command or Query object within our domain.

The command and query objects are based on the Commands and Read Models we have found in our event storming session. In this way, our code speaks the same language as our domain model and we have traceability all the way. In our code, for example, we have the CreateDiagnosticSession, JoinSession, and AnswerQuestion commands.

Commands and Read Models are patterns from Domain Driven Design. A Command represents a user’s intent to change something; a Read Model is the information a user needs to issue a command - what do you need to know to take that decision?

A Command object’s responsibility is to:

- perform validation (optional)

- look up an aggregate, i.e. the ‘thing’ the command is operating on

- delegate the actual work to the aggregate

- store any updates or events

- take care of other side effects, like sending out a message

We keep commands mostly free of domain decisions. Domain logic should live as much as possible in the rich domain objects, like DiagnosticSession or Facilitator. A command just does a lookup, delegates, and takes care of side effects. Most of the time, conditional logic in a command is a smell.

A Command is an example of the Command design pattern. It is a function turned into an object, which facilitates injecting any dependencies needed for handling side-effects.
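The responsibilities listed above can be sketched in a few lines. This is an illustration under assumptions, not the application's code: the Session aggregate, the InMemorySessions repository and this JoinSession implementation are all hypothetical, written only to show the shape of a command (look up, delegate, store).

```python
class Session:
    """Hypothetical aggregate: it owns the domain decision."""

    def __init__(self, session_id, max_participants):
        self.id = session_id
        self.max_participants = max_participants
        self.participants = []

    def join(self, participant):
        # the rule 'a full session accepts nobody' lives here, not in the command
        if len(self.participants) >= self.max_participants:
            return False
        self.participants.append(participant)
        return True


class InMemorySessions:
    """Hypothetical repository holding aggregates in a dict."""

    def __init__(self, *sessions):
        self._sessions = {session.id: session for session in sessions}

    def by_id(self, session_id):
        return self._sessions[session_id]

    def save(self, session):
        self._sessions[session.id] = session


class JoinSession:
    """A function turned into an object, so dependencies can be injected."""

    def __init__(self, sessions):
        self.sessions = sessions

    def __call__(self, session_id, participant):
        session = self.sessions.by_id(session_id)   # look up the aggregate
        joined = session.join(participant)          # delegate the actual work
        self.sessions.save(session)                 # store any updates
        return joined


join_session = JoinSession(InMemorySessions(Session("s-1", max_participants=1)))
first = join_session("s-1", "ann")
second = join_session("s-1", "bob")
```

Note that the command itself contains no conditional logic; the decision whether someone may join is made by the aggregate.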

Creating a diagnostic session

Let’s have a look at some code. To create a new diagnostic session, the front end does a POST on /api/diagnostic-sessions. The route code looks like this (with some details left out):

```python
from flask import jsonify, request


class DiagnosticSessionRoutes(object):
    @staticmethod
    def withDiagnosticSessionsRepository(diagnostic_session_repository, ...):
        # production wiring: build the commands from the injected repositories
        return DiagnosticSessionRoutes(
            diagnostic_session_repository=diagnostic_session_repository,
            create_diagnostic_session=CreateDiagnosticSession(diagnostic_session_repository),
            ...)

    def __init__(self, diagnostic_session_repository, create_diagnostic_session, ...):
        self.diagnosticSessionsRepository = diagnostic_session_repository
        self.create_diagnostic_session = create_diagnostic_session
        ...

    def register(self, application):
        route = RouteBuilder(application)

        @route('/api/diagnostic-sessions', methods=['POST'], login_required=True)
        def create():
            # merge the JSON body with the currently logged in facilitator
            result = self.create_diagnostic_session(
                {**request.get_json(), **dict(facilitator=self.current_user_repository.current_user())})
            if result.is_success():
                return jsonify(id=str(result.id)), 201
            else:
                return jsonify(result.body), 400
        ...
```

We have a static creation method withDiagnosticSessionsRepository that creates the route and instantiates commands based on injected repositories. In the tests, we use the constructor, because it enables us to inject a test double for commands.

RouteBuilder is a thin wrapper around Flask which helps us with, for instance, securing routes when necessary with login_required.

The POST on /api/diagnostic-sessions delegates to the instance of the CreateDiagnosticSession command. It extracts the fields of the JSON data into a Python dictionary, adds the currently logged in facilitator to this dictionary and passes this to the command as arguments. When successful, the command returns the id of the new session, which is put in the HTTP response. If the command is not successful, the body of its result will contain an error message, which is returned in the HTTP response.

To represent the command results, we have introduced a small Results library. We prefer to have success and failure explicitly in our code, rather than checking for None. None erases the reason for failure, which makes it difficult to deal with at the call-site. Result is available as part of our quiltz-domain library on GitHub.
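To illustrate why an explicit result is easier to handle at the call-site than None, here is a minimal sketch. It is not the quiltz-domain implementation: the Success and Failure classes below are simplified stand-ins written for this example, and find_session is a hypothetical function.

```python
class Success:
    """Simplified stand-in: carries the named values of a successful outcome."""

    def __init__(self, **values):
        self.__dict__.update(values)

    def is_success(self):
        return True


class Failure:
    """Simplified stand-in: carries the reason something failed."""

    def __init__(self, message):
        self.message = message

    def is_success(self):
        return False


def find_session(sessions, session_id):
    # hypothetical lookup: the caller always gets an object it can inspect
    if session_id not in sessions:
        return Failure(message=f"no session with id {session_id}")
    return Success(session=sessions[session_id])


sessions = {"s-1": "Team Rocket workshop"}
found = find_session(sessions, "s-1")
missing = find_session(sessions, "s-2")
```

With None, the caller of find_session would only know that something went wrong; with a Failure, the reason travels along and can be put straight into an error response.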

We have introduced a register function that allows us to write focused adapter integration tests to cover the Flask integration:

```python
import pytest
from unittest.mock import Mock


def createDiagnosticSessionRoutes(**kwargs):
    # every dependency defaults to None; a test overrides only what it needs
    validRouteParams = dict(
        diagnostic_session_repository = None,
        current_user_repository = None,
        create_diagnostic_session = None,
        ...)
    return DiagnosticSessionRoutes(**{**validRouteParams, **kwargs})


class TestDiagnosticSessionRoutes_Post(RoutesTests):
    @pytest.fixture(autouse=True)
    def setUp(self):
        self.setup_app()
        self.do_signin()

    def create_routes(self):
        self.create_diagnostic_session = Mock()
        self.create_diagnostic_session.return_value = Success(id=aValidID(33))
        return createDiagnosticSessionRoutes(
            create_diagnostic_session=self.create_diagnostic_session,
            current_user_repository=self.current_user_repository
        ).register(self.application)

    def test_post_diagnostic_session_invokes_create_diagnostic_session(self):
        self.client.post('/api/diagnostic-sessions',
                         data=json_dumps(validDiagnosticSessionCreationParameters()),
                         content_type='application/json')
        self.create_diagnostic_session.assert_called_with(
            validDiagnosticSessionCreationParameters(facilitator=aValidFacilitator())
        )
```

We have hidden the Flask setup boilerplate in a RoutesTests base class. This also creates a test_client for doing HTTP calls. The setUp function makes sure all requests are performed as an authenticated user (self.do_signin()). It also provides a current_user_repository.

The createDiagnosticSessionRoutes function conveniently provides dummy None references for the different dependencies these routes need (in a quite Pythonic way), so that we can focus on the ones relevant for the specific tests.
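The merge at the heart of that helper is plain dictionary unpacking, where later keys win. A small stand-alone sketch, with a hypothetical make_routes_kwargs function that shows only the merge and not the route construction:

```python
def make_routes_kwargs(**overrides):
    # hypothetical helper: defaults first, overrides last, so overrides win
    defaults = dict(
        diagnostic_session_repository=None,
        current_user_repository=None,
        create_diagnostic_session=None,
    )
    return {**defaults, **overrides}


kwargs = make_routes_kwargs(create_diagnostic_session="a test double")
```

Everything a test does not mention stays None, which also documents that the test does not expect those dependencies to be used.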

We have decided to mock the CreateDiagnosticSession command. This bears the risk of having green tests even though the route and the actual command do not work together, especially with Python’s flexible duck typing. Therefore we have also created a suite of component end-to-end tests that test the full component wired with in-memory repositories.

Inside the command

The CreateDiagnosticSession command code looks like this, with some details left out for convenience:

```python
class CreateDiagnosticSession:
    def __init__(self, diagnostic_session_repository, id_generator=IDGenerator(), clock=Clock()):
        self.id_generator = id_generator
        self.repo = diagnostic_session_repository
        self.clock = clock

    def __call__(self, attributes):
        # the parentheses allow the method chain to continue on the next line
        result = (DiagnosticSessionCreator(id_generator=self.id_generator, clock=self.clock)
                  .create_with_id(**attributes))
        if not result.is_success():
            return Failure(message='failed to create diagnostic session')
        self.repo.save(event=result.diagnostic_session_created)
        return Success(id = result.diagnostic_session_created.id)
```

We have implemented the command as a Python Callable, which allows us to call it as a function. The actual creation (domain logic) is delegated to DiagnosticSessionCreator, a factory. After successful creation, the command stores the resulting event in a diagnostic session repository.

The command needs dependencies to do its job. We inject a diagnostic session repository via the constructor. We also inject an IDGenerator to generate unique ids based on UUIDs. Because generating UUIDs introduces randomness and an operating system dependency, we have created our own thin abstraction around it: the ID and IDGenerator classes, which are also part of the quiltz-domain library. This allows us to test this command with a predictable fixed ID generator.
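A thin abstraction of this kind could look roughly like the sketch below. It is not the quiltz-domain code: only the generate_id method name is taken from the snippets in this post, while the class bodies, FixedIDGenerator and new_session are assumptions made for illustration.

```python
import uuid


class IDGenerator:
    """Sketch of a thin wrapper around the standard library's uuid4."""

    def generate_id(self):
        return str(uuid.uuid4())


class FixedIDGenerator:
    """Test variant: always hands out the id it was constructed with."""

    def __init__(self, fixed_id):
        self.fixed_id = fixed_id

    def generate_id(self):
        return self.fixed_id


def new_session(team, id_generator=None):
    # hypothetical domain function that needs a fresh id
    id_generator = id_generator or IDGenerator()
    return dict(id=id_generator.generate_id(), team=team)


random_session = new_session("Team Rocket")
fixed_session = new_session("Team Rocket", FixedIDGenerator("id-12"))
```

With the fixed variant injected, a test can assert on the complete resulting object instead of working around an unpredictable id.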

Because the command needs dependencies, we find it appropriate to apply the Command design pattern and turn it into a class. An alternative is to use higher order functions and currying, but we prefer the explicitness of a class.
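For comparison, the higher order function alternative might look like this sketch. The names create_diagnostic_session_with, save and generate_id are hypothetical; the point is only that the dependencies are captured in a closure instead of in instance attributes.

```python
def create_diagnostic_session_with(save, generate_id):
    """Returns a command function with its dependencies captured in a closure."""

    def create_diagnostic_session(attributes):
        session = {**attributes, "id": generate_id()}
        save(session)
        return session["id"]

    return create_diagnostic_session


saved = []
create = create_diagnostic_session_with(save=saved.append, generate_id=lambda: "id-1")
new_id = create({"team": "Team Rocket"})
```

This is compact, but the dependencies are no longer visible as named attributes on an object, which is the explicitness a class provides.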

As we work test-driven, we have focused unit tests for the command:

```python
import pytest
from unittest.mock import Mock


class TestCreateDiagnosticSession:
    @pytest.fixture(autouse=True)
    def setUp(self):
        self.repo = Mock(DiagnosticSessionsRepository)
        self.id_generator = FixedIDGeneratorGenerating(aValidID(12))
        self.clock = Clock.fixed()
        self.create_diagnostic_session = CreateDiagnosticSession(
            diagnostic_session_repository=self.repo,
            id_generator=self.id_generator,
            clock=self.clock)

    def test_saves_a_new_diagnostic_session_with_an_id_in_the_repo(self):
        session_creator = DiagnosticSessionCreator(id_generator=self.id_generator, clock=self.clock)
        self.create_diagnostic_session(validDiagnosticSessionCreationParameters())
        # the fixed id and clock make the expected event equal to the saved one
        expected_event = (session_creator
                          .create_with_id(**validDiagnosticSessionCreationParameters())
                          .diagnostic_session_created)
        self.repo.save.assert_called_once_with(event=expected_event)

    def test_returns_success_if_all_ok(self):
        ...

    def test_returns_failure_if_something_failed(self):
        result = self.create_diagnostic_session(dict())
        assert result == Failure(message='failed to create diagnostic session')
```

Here we decided to mock the DiagnosticSessionsRepository. An alternative would be to use an InMemoryDiagnosticSessionsRepository. We find ourselves moving away from mocking repositories and preferring in-memory variants of the repositories, as they are behaviourally closer to the database based variants.

We inject a fixed ID generator and a fixed clock - we control time, how cool is that? We use test data builders for both the fixed id and for the diagnostic session creation parameters, to reduce clutter and irrelevant details in the test.
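A clock that can be fixed might be sketched as follows. This is not the project's actual Clock class: only the now() and fixed() names appear in the snippets in this post, and the default moment and the FixedClock subclass are assumptions.

```python
from datetime import datetime, timezone


class Clock:
    """Sketch of a clock abstraction: production code asks it for the time."""

    def now(self):
        return datetime.now(timezone.utc)

    @staticmethod
    def fixed(moment=datetime(2020, 5, 1, 12, 0, tzinfo=timezone.utc)):
        # the default moment is arbitrary; tests may pass their own
        return FixedClock(moment)


class FixedClock(Clock):
    """Test variant: time stands still at the given moment."""

    def __init__(self, moment):
        self.moment = moment

    def now(self):
        return self.moment


clock = Clock.fixed()
first_reading = clock.now()
second_reading = clock.now()
```

Because both readings are identical, a test can build its expected event with the same clock and compare it for equality with the event the command produced.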

Creation domain logic

The DiagnosticSessionCreator is responsible for validating and creating a valid diagnostic session:

```python
class DiagnosticSessionCreator:
    def __init__(self, id_generator=IDGenerator(), clock=Clock()):
        self.id_generator=id_generator
        self.clock = clock

    def create_with_id(self, team=None, date=None, ...):
        return validate(
            presence_of('team', team),
            max_length_of('team', team, 140),
            presence_of('date', date),
            ...
        ).map(lambda validParameters:
            Success(diagnostic_session_created = DiagnosticSessionCreated(
                timestamp=self.clock.now(),
                diagnostic_session=DiagnosticSession(
                    id = self.id_generator.generate_id(),
                    team = validParameters.team,
                    date = validParameters.date,
                    ...
                )))
        )
```

The create_with_id function provides default values for incoming data, so that it can handle missing data. It validates the data using a small validation library that is part of the quiltz-domain library. Only if validation is successful, a DiagnosticSessionCreated event is instantiated, containing a new diagnostic session. If the data is not valid, a Failure object is returned by the validation functions.
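To make the validate(...).map(...) flow more concrete, here is a toy re-implementation. It only borrows the function names from the snippet above; the behaviour (first failure wins, map is skipped on failure) is an assumption about how such a library could work, not a description of quiltz-domain.

```python
class Success:
    """Toy result: holds named values; map applies the next step."""

    def __init__(self, **values):
        self.__dict__.update(values)

    def is_success(self):
        return True

    def map(self, next_step):
        return next_step(self)


class Failure:
    """Toy result: holds the reason; map skips the next step."""

    def __init__(self, message):
        self.message = message

    def is_success(self):
        return False

    def map(self, next_step):
        return self


def presence_of(name, value):
    if value is None or value == "":
        return Failure(message=f"{name} is missing")
    return Success(**{name: value})


def max_length_of(name, value, max_length):
    if value is not None and len(value) > max_length:
        return Failure(message=f"{name} is longer than {max_length} characters")
    return Success(**{name: value})


def validate(*results):
    valid = {}
    for result in results:
        if not result.is_success():
            return result              # assumption: the first failure wins
        valid.update(vars(result))     # collect the validated values
    return Success(**valid)


def create(team=None, date=None):
    # hypothetical creation function mirroring the shape of create_with_id
    return validate(
        presence_of("team", team),
        max_length_of("team", team, 140),
        presence_of("date", date),
    ).map(lambda valid: Success(session=dict(team=valid.team, date=valid.date)))


ok = create(team="Team Rocket", date="2020-05-01")
failed = create(date="2020-05-01")
```

The creation lambda only runs for valid input, so the code that builds the domain object never has to check its parameters again.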

Repositories

The diagnostic sessions repository interface has just a few functions. We have expressed it using a Python abstract base class (ABC). It can save a diagnostic session event and it can provide a specific session by id or a list of all sessions:

```python
from abc import ABC, abstractmethod


class DiagnosticSessionsRepository(ABC):
    @abstractmethod
    def all(self, facilitator):
        pass

    @abstractmethod
    def by_id(self, diagnostic_session_id, facilitator):
        pass

    @abstractmethod
    def save(self, event):
        pass
```

This interface is part of our domain. It describes the role a diagnostic session repository plays. We have an in-memory implementation for tests, and a SQLAlchemy/SQLite based implementation DBDiagnosticSessionsRepository.
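An in-memory implementation of this interface can be very small. The sketch below is an assumption about what one could look like, not the project's code: it assumes that the saved event exposes a diagnostic_session, that a session has id and facilitator attributes, and that a lookup miss returns None. The SimpleNamespace objects stand in for real domain objects.

```python
from abc import ABC, abstractmethod
from types import SimpleNamespace


class DiagnosticSessionsRepository(ABC):
    @abstractmethod
    def all(self, facilitator): ...

    @abstractmethod
    def by_id(self, diagnostic_session_id, facilitator): ...

    @abstractmethod
    def save(self, event): ...


class InMemoryDiagnosticSessionsRepository(DiagnosticSessionsRepository):
    """Sketch: keeps sessions in a dict keyed by id."""

    def __init__(self):
        self._sessions = {}

    def all(self, facilitator):
        # assumption: a facilitator only sees their own sessions
        return [s for s in self._sessions.values() if s.facilitator == facilitator]

    def by_id(self, diagnostic_session_id, facilitator):
        matches = [s for s in self.all(facilitator) if s.id == diagnostic_session_id]
        return matches[0] if matches else None  # what to return on a miss is a design choice

    def save(self, event):
        session = event.diagnostic_session
        self._sessions[session.id] = session


# stand-ins for domain objects, for illustration only
session = SimpleNamespace(id="id-1", facilitator="ann", team="Team Rocket")
repo = InMemoryDiagnosticSessionsRepository()
repo.save(SimpleNamespace(diagnostic_session=session))
```

Because it implements the same abstract base class, forgetting one of the three methods fails at instantiation time rather than somewhere in the middle of a test.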

The DBDiagnosticSessionsRepository has its own adapter integration test, where we test the database mapping and the queries against an in-memory SQLite database. For each test case, a database is created and schema migrations are run so that the database schema corresponds to the current schema. This all runs in milliseconds.
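The idea of a fresh, migrated in-memory database per test case can be sketched with the standard library alone. Note the swap: this example uses the sqlite3 module directly instead of SQLAlchemy, and the MIGRATIONS list, the table layout and the SqliteSessions class are hypothetical.

```python
import sqlite3

# hypothetical migrations; a real project would run its migration tool here
MIGRATIONS = [
    "CREATE TABLE diagnostic_sessions (id TEXT PRIMARY KEY, team TEXT NOT NULL)",
    "ALTER TABLE diagnostic_sessions ADD COLUMN date TEXT",
]


def fresh_database():
    # ':memory:' gives every test its own throwaway database
    connection = sqlite3.connect(":memory:")
    for migration in MIGRATIONS:
        connection.execute(migration)
    return connection


class SqliteSessions:
    """Hypothetical adapter mapping session dicts to and from a table."""

    def __init__(self, connection):
        self.connection = connection

    def save(self, session):
        self.connection.execute(
            "INSERT INTO diagnostic_sessions (id, team, date) VALUES (?, ?, ?)",
            (session["id"], session["team"], session["date"]))

    def by_id(self, session_id):
        row = self.connection.execute(
            "SELECT id, team, date FROM diagnostic_sessions WHERE id = ?",
            (session_id,)).fetchone()
        return dict(id=row[0], team=row[1], date=row[2]) if row else None


def test_saved_session_can_be_retrieved():
    repo = SqliteSessions(fresh_database())
    session = dict(id="id-1", team="Team Rocket", date="2020-05-01")
    repo.save(session)
    assert repo.by_id("id-1") == session
```

Running the migrations in every test also means the migrations themselves are exercised on each test run.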

Putting it all together, guided by tests

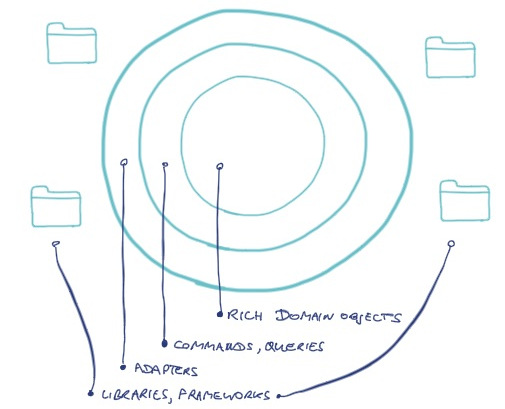

Looking back on how we have applied Hexagonal Architecture principles, we distinguish a number of rings, where the ‘domain’ consists of a ring of commands and queries and a core of rich domain objects.

The Hexagonal Architecture pattern provides a lens to look at our automated tests. Our domain objects and commands have unit tests with limited scope (indicated by the green lines):

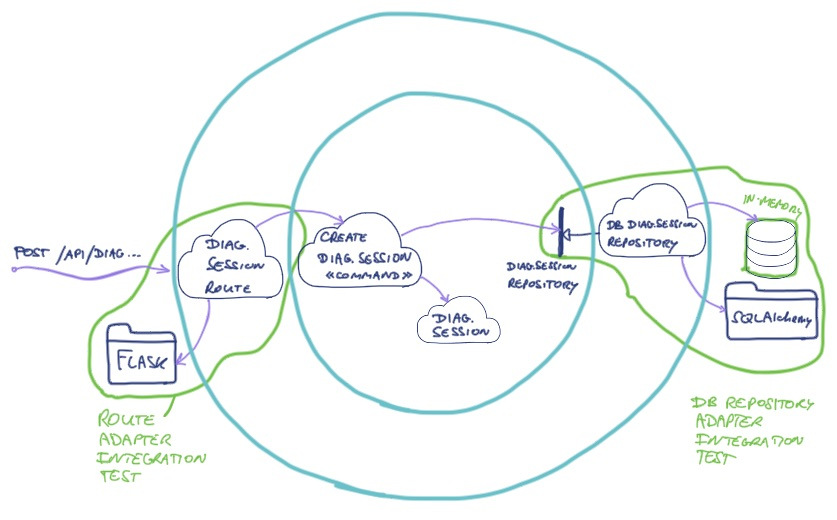

We have created a primary adapter for the HTTP routes and a secondary adapter for the SQLAlchemy/SQLite based diagnostic session repository. They have their own adapter integration tests that test how our code integrates with the different libraries:

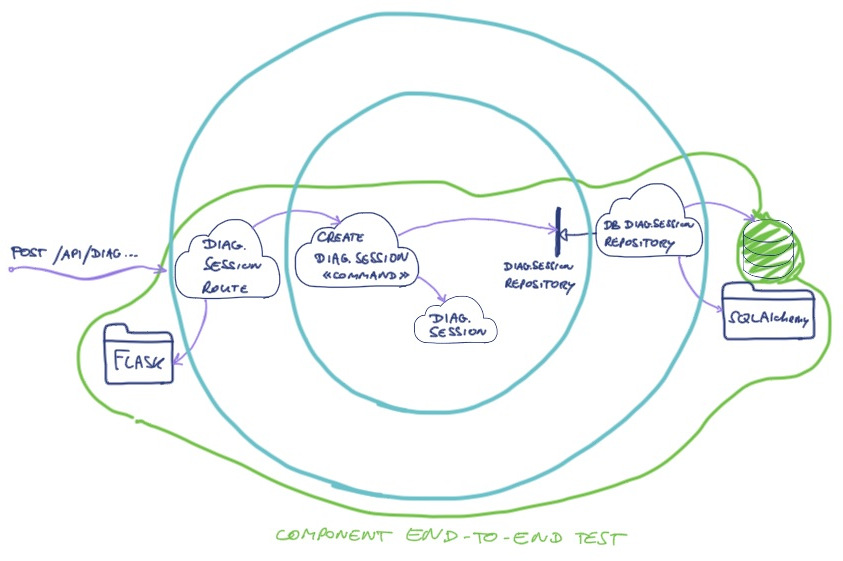

We also have a suite of component end-to-end tests, which test the whole back-end component through its REST API. This end-to-end test plugs in in-memory repository adapters to improve speed and reliability. We don’t need to run the end-to-end test against a real database, because our adapter integration tests cover that concern extensively.

The component end-to-end tests run fast enough to be included in the back-end automated test suite. The whole suite of over 560 tests runs in under 10 seconds, giving us rapid feedback on every line we change.

Conclusion

In this post, we have shown how we have applied the Hexagonal Architecture pattern and how it helped us to let different concerns find their place in the code. It helps to decide What To Put Where. It also helped us in creating a fast and focused test suite that gives rapid feedback when developing.

Hexagonal Architecture fits well with Domain Driven Design. It facilitates using domain language in the core of your components.

References

- The Domain Driven Design community dddcommunity.org is a good starting point for further exploration

- Event Storming is a powerful collaborative domain modelling technique invented by Alberto Brandolini, who is writing a book on it - recommended!

- We work test-driven, because the discipline of Test Driven Development greatly helps us to deliver working software continuously; the Growing Object-Oriented Software, Guided by Tests book by Nat Pryce & Steve Freeman is a recommended starting point. Or contact us if you’re interested in a workshop.

This post is part of a series on Hexagonal Architecture:

- Hexagonal Architecture

- TDD & Hexagonal Architecture in front end - a journey

- How to decide on an architecture for automated tests

- How to keep Front End complexity in check with Hexagonal Architecture

- A Hexagonal Vue.js front-end, by example

- Hexagons in the back end - an example

Credits: